Exploring BlockBook: Architecture, Dockerization, and Overcoming Challenges

Welcome to the first article in our series exploring the BlockBook project. We'll dive into its architecture, our approaches to Dockerization, and the challenges we faced along the way.

Articles in the Series

To help you get started with deploying and managing BlockBook, here are the articles in this series:

- Running BlockBook as a Systemd Service on a VM | EC2 Instance: Learn how to configure and manage BlockBook as a systemd service on virtual machines and EC2 instances, ensuring reliable and automated operations.

- Running BlockBook as a Docker Container on a VM | EC2 Instance: Discover the steps and best practices for deploying BlockBook using Docker containers, including configuration and management on various types of servers.

These articles will build on the foundation laid here and provide practical insights for efficient deployment and management.

BlockBook App Architecture

BlockBook consists of two main components:

Backend

The backend is the FLO blockchain's core wallet. But what exactly is a core wallet for a blockchain? In essence, it's the fundamental software that allows the blockchain to function. It handles all the basic operations, such as creating and broadcasting transactions, maintaining a full copy of the blockchain, and validating blocks.

Key components include:

- flod: The FLO daemon, similar to Bitcoin's bitcoind.

- flo-cli: The command-line interface for interacting with flod.

The backend is accessed through RPC (Remote Procedure Call) and relies heavily on its configuration file, which we'll refer to frequently in this series. It is typically started as a systemd service on Debian- or Ubuntu-based operating systems.
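Because flod descends from Bitcoin Core, it is reasonable to expect a Bitcoin-style JSON-RPC interface over HTTP with basic authentication, which `flo-cli` also talks to. The minimal sketch below assumes exactly that, plus a `key=value` config file (called `flo.conf` here) holding `rpcuser`, `rpcpassword`, and optionally `rpcport`. The helper names are hypothetical, and the parser flattens `[section]` blocks rather than honoring them.

```python
import base64
import json
import urllib.request


def parse_conf(text):
    """Parse a bitcoind-style key=value config (assumed to match flod's format).

    Blank lines, '#' comments, and section headers such as [test] are skipped;
    a key that appears twice keeps its last value.
    """
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "[")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            settings[key.strip()] = value.strip()
    return settings


def build_payload(method, params=None, request_id=1):
    """Encode a JSON-RPC request body in the style bitcoin-cli-like clients use."""
    body = {"jsonrpc": "1.0", "id": request_id, "method": method,
            "params": params if params is not None else []}
    return json.dumps(body).encode("utf-8")


def rpc_call(host, port, user, password, method, params=None, timeout=10.0):
    """POST a JSON-RPC request with HTTP basic auth and return its 'result'.

    Bitcoin-style servers usually report RPC errors with a non-200 status,
    which urlopen raises as urllib.error.HTTPError; an 'error' object in a
    200 reply is raised here as RuntimeError.
    """
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(
        f"http://{host}:{port}/",
        data=build_payload(method, params),  # supplying data makes this a POST
        headers={"Content-Type": "application/json",
                 "Authorization": f"Basic {token}"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        reply = json.load(response)
    if reply.get("error"):
        raise RuntimeError(reply["error"])
    return reply["result"]
```

For example, with `conf = parse_conf(open("flo.conf").read())`, the call `rpc_call("127.0.0.1", int(conf.get("rpcport", 8066)), conf["rpcuser"], conf["rpcpassword"], "getblockcount")` would ask a local mainnet flod for its block height, assuming it supports the Bitcoin Core method name.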

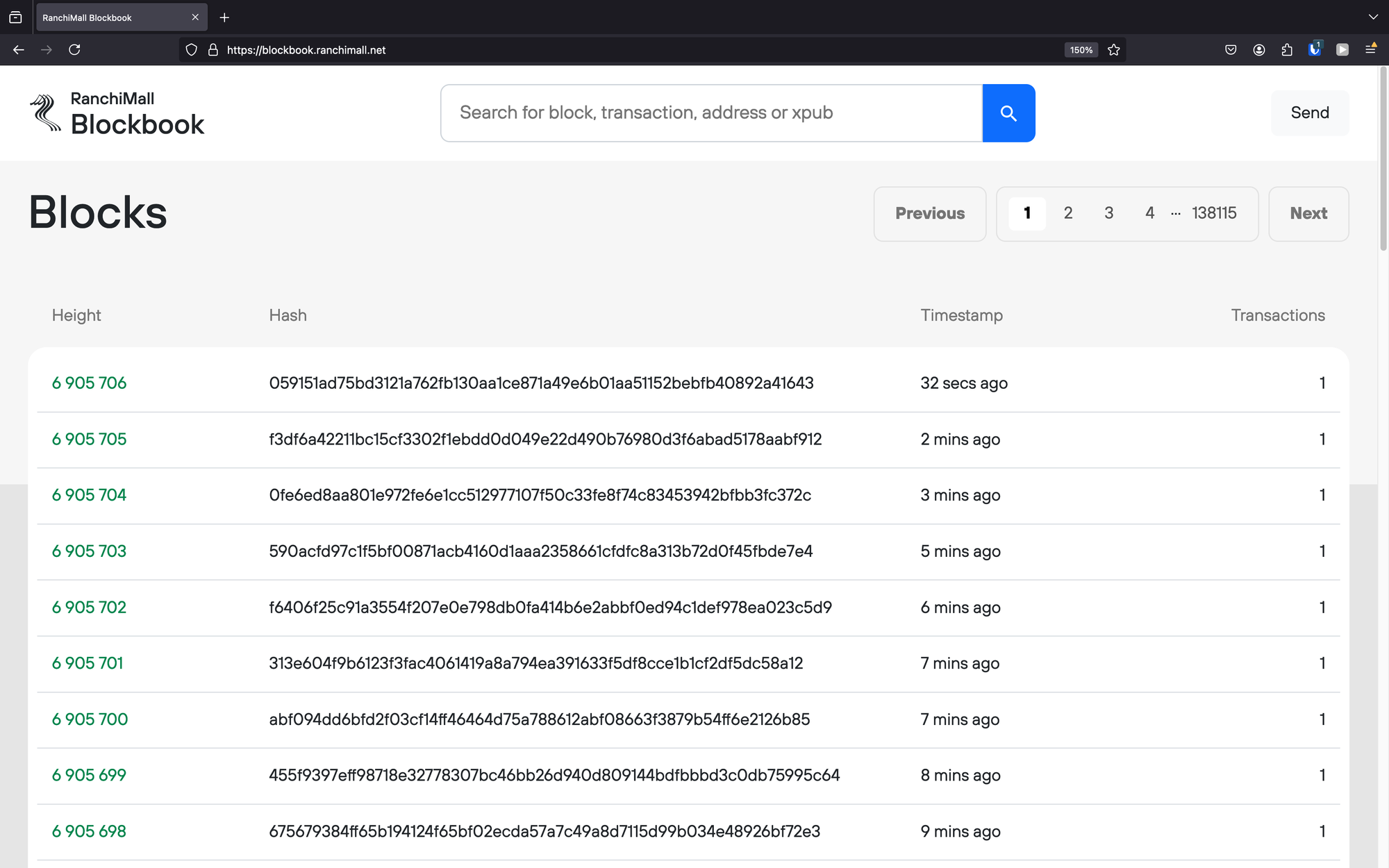

BlockBook (Frontend + API)

BlockBook also encompasses a frontend explorer and an API. The explorer displays blocks, transactions, and their details in a web UI, while the API returns JSON over REST for programmatic use.
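As a quick illustration of programmatic use, the sketch below builds and fetches API URLs. It assumes this build exposes the upstream trezor/blockbook v2 routes (`/api/v2/block/<height or hash>`, `/api/v2/tx/<txid>`, `/api/v2/address/<address>`) and that the instance is reachable over plain HTTP; a deployment configured with a TLS certificate would need `https://`. The helper names and base URL are hypothetical.

```python
import json
import urllib.parse
import urllib.request


def api_url(base, kind, ident):
    """Build a Blockbook v2 API URL such as <base>/api/v2/block/<height>.

    `kind` is restricted to the upstream v2 resources assumed here.
    """
    if kind not in {"block", "tx", "address"}:
        raise ValueError(f"unsupported resource: {kind}")
    # Percent-encode the identifier so hashes and addresses form one path segment.
    return f"{base.rstrip('/')}/api/v2/{kind}/{urllib.parse.quote(str(ident), safe='')}"


def fetch_json(url, timeout=10.0):
    """GET a URL and decode its JSON body."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)
```

With a mainnet instance listening locally on its public port, `fetch_json(api_url("http://127.0.0.1:9166", "block", 0))` would return the genesis block as a dictionary.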

Communication and Ports

Understanding how these two parts interact is crucial. We’ll discuss the ports used and how they discover and communicate with each other.

Ports

Mainnet

- Backend runs on ports:

- backend_rpc: 8066

- backend_message_queue: 38366

- BlockBook runs on ports:

- Internal: 9066

- Public: 9166

Testnet

- Backend runs on ports:

- backend_rpc: 18066

- backend_message_queue: 48366

- BlockBook runs on ports:

- Internal: 19066

- Public: 19166

The configuration files where these details are specified are located at:

- Mainnet: configs/coins/flo.json

- Testnet: configs/coins/flo_testnet.json
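To confirm which ports a build will actually use, the values can be read straight from these files. This sketch assumes each file has a top-level `ports` object keyed by names such as `backend_rpc` and `blockbook_public`, as in the upstream trezor/blockbook coin configs; `load_ports` is a hypothetical helper and every other field is ignored.

```python
import json
from pathlib import Path


def load_ports(config_path):
    """Return the 'ports' object from a BlockBook coin config (assumed layout)."""
    data = json.loads(Path(config_path).read_text(encoding="utf-8"))
    ports = data.get("ports")
    if not isinstance(ports, dict):
        raise KeyError(f"no 'ports' object in {config_path}")
    # Normalize values to int in case any are stored as strings.
    return {name: int(value) for name, value in ports.items()}
```

Run from the repository root, `load_ports("configs/coins/flo.json")` and `load_ports("configs/coins/flo_testnet.json")` would print the mainnet and testnet port maps for comparison with the lists above.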

Why Containerize BlockBook?

The decision to containerize BlockBook was driven by several factors:

- Server Overload: Our VM running both the testnet and mainnet versions of the app was frequently overloaded, and we needed a more streamlined way to handle these situations.

- Scalability and Resilience: Containerizing allows us to leverage orchestration tools like Kubernetes in the future, providing more scalable and resilient deployments.

Dockerization Approaches

We experimented with two Dockerization approaches:

- Separate Containers: Running the backend and frontend in separate containers that communicate with each other.

- Single Container: Combining both components into a single container.

Challenges with Containers

Containers introduced their own set of challenges:

- Startup Time: Containers can take a while to become ready. Managing dependencies, and making sure one container waits until another is ready, led us to implement health checks and to coordinate startup order with Docker Compose.

- Bootstrap and Long Wait Times: Bootstrapping can involve long waits, and we needed a way to handle them without resorting to manual restarts.
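A common way to avoid manual restarts is to make the dependent service wait until the backend is actually accepting connections. The sketch below polls a TCP port until it opens or a deadline passes, returning a boolean that an entrypoint or health-check script could turn into an exit code. It only shows that the port is open, not that the backend has finished syncing. The host name `backend`, the port, and the timings are illustrative assumptions, not the values we used.

```python
import socket
import time


def port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def wait_for_port(host, port, deadline_s=600.0, interval_s=5.0):
    """Poll host:port until it accepts connections or deadline_s seconds elapse."""
    end = time.monotonic() + deadline_s
    while True:
        if port_open(host, port):
            return True
        remaining = end - time.monotonic()
        if remaining <= 0:
            return False
        # Never sleep past the deadline.
        time.sleep(min(interval_s, remaining))
```

In a container entrypoint, `sys.exit(0 if wait_for_port("backend", 8066) else 1)` would block BlockBook's startup until the hypothetical `backend` service's mainnet RPC port accepts connections, or fail after ten minutes.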

We'll explore how we addressed these challenges using health checks in Docker Compose.

This overview sets the stage for deeper dives into each topic in future posts. Stay tuned as we continue to explore the intricacies of BlockBook and our journey towards a more stable and scalable system architecture.